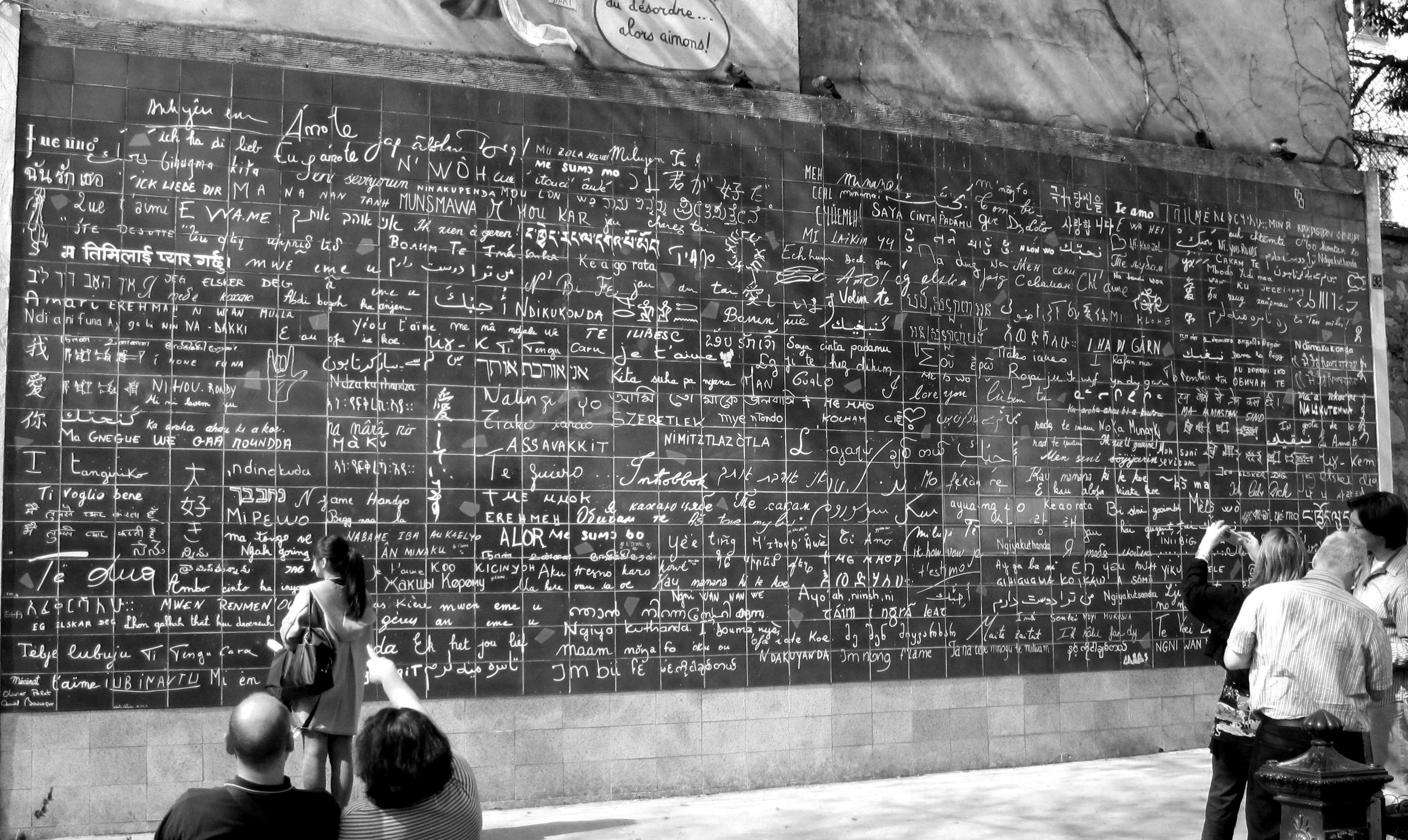

Figure 1 – The I Love You Wall, Le Mur des Je t’aime, with “I Love You” written in hundreds of languages, brainchild of Frédéric Baron, in Paris, March 28, 2011. From Wikimedia Commons, uploaded by Oh Paris to Flickr under a Creative Commons Attribution License.

In the last two blogs we’ve gone from the Empire State Building to the concept that a photograph is a five-dimensional object. This merely states the obvious point that a photograph is a set of pixels, which are laid out in a two-dimensional grid. Each pixel requires two numbers to define its position, and three numbers to define its brightness and color: the amounts of red, green, and blue. So we define each pixel in the image as a vector (x,y,r,g,b). And here is where the fun begins.
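For readers who like to see such things spelled out, here is a minimal Python sketch of the idea. The tiny 2x2 image, its color values, and the helper name `to_vectors` are all made up for illustration; nothing here is meant as a real image format.

```python
# A tiny 2x2 "photograph": each grid cell holds (r, g, b) amounts from 0 to 255.
# The pixel values are invented purely for illustration.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]


def to_vectors(grid):
    """Flatten a grid of (r, g, b) cells into (x, y, r, g, b) vectors."""
    return [
        (x, y, r, g, b)
        for y, row in enumerate(grid)       # y is the row index
        for x, (r, g, b) in enumerate(row)  # x is the column index
    ]


pixels = to_vectors(image)
for pixel in pixels:
    print(pixel)
```

Every pixel comes out as five numbers, two for where it is and three for what color it is, which is all that "five-dimensional" means here.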

Let’s imagine that we add the physicist’s favorite fourth dimension, time, to the mix. So we wind up with a string of images, each taken at a different time. Wait a minute, that’s a movie, and each pixel in the movie is a vector (x,y,r,g,b,t), where t can be expressed either as a true time, say in ms, or as a frame number. A movie, which is really a type of photograph, is a six-dimensional object.

Well, that’s a silent movie, isn’t it? What about sound? We can certainly add sound to a movie. We had to add time first, because human perception of sound requires time. So do we just add sound as one more number to the mix? Well, obviously not. Sound is not just intensity, any more than light is; it’s also got frequency, which is a sound’s color. Do we need three numbers or coordinates to describe sound? That is, are there three primary sound frequencies? Well, like visual color, sound color is complicated: a mish-mash of psychology, physiology, and physics, and way beyond what I want to discuss today. But, at a minimum, we know that the concept of primary sounds cannot be completely devoid of validity, because an A is an A regardless of the octave, and all (western) music can be written as a sequence of the notes of the chromatic scale. So let’s just say that we have to add n more dimensions or numbers to define the sound in a movie. So a sound movie is a form of photograph, which is (6+n)-dimensional.

For good measure, we can even make the movie 3D. A simple way to do that is to make it stereoscopic, taken from two slightly shifted positions. OMG, this gives us a (7+n)-dimensional photograph.
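The bookkeeping so far can be written as a little dimension counter. This is only a sketch of the counting game played above: the function name `photograph_dimensions` and its parameters are my own invention, and since the number of sound dimensions n is left open in the text, the value 3 used below is an arbitrary placeholder.

```python
def photograph_dimensions(time=False, sound_dims=0, stereo=False):
    """Count the numbers needed per pixel, following the tally in the text."""
    if sound_dims and not time:
        # Sound only makes sense once time has been added.
        raise ValueError("sound requires time")
    dims = 5              # x, y, r, g, b: the still color photograph
    if time:
        dims += 1         # t: the silent movie
    dims += sound_dims    # n: however many numbers sound turns out to need
    if stereo:
        dims += 1         # one more for the stereoscopic view
    return dims


n = 3  # placeholder only; the text deliberately leaves n undetermined
print(photograph_dimensions())                                        # still photograph
print(photograph_dimensions(time=True))                               # silent movie
print(photograph_dimensions(time=True, sound_dims=n))                 # sound movie
print(photograph_dimensions(time=True, sound_dims=n, stereo=True))    # 3D sound movie
```

With n set to 3 the four calls give 5, 6, 9, and 10, that is 5, 6, 6+n, and 7+n.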

I know that all of this seems just a silly numbers game that restates the obvious in pretty terms. But there’s one more piece to the puzzle that we have to speak about before we can understand the why. This has to do with neuroplasticity, which is pretty much the ability of our brains, especially those of children, to learn, adapt, and, if necessary, find new pathways. I read an article once by someone who had lost his hearing over the course of a few short weeks. To restore his hearing he had a cochlear implant, a device where the damaged parts of the ear are replaced by a microphone and electrodes that stimulate the neurons of the inner ear. When he woke up from surgery there was a cacophony of sound. He had to relearn how to hear because the new neuronal pathways, the circuits within his brain, were completely different from the ones that he previously used. But his brain was plastic, aka moldable, enough to relearn. A very similar thing is going on with what are known as subretinal implants. Analogous to a cochlear implant, a subretinal implant consists of a silicon wafer containing light-sensitive microphotodiodes. These generate electrical signals in response to light, and the signals directly stimulate the retinal cells. Humans have tremendous neuroplastic abilities.

There is a fascinating article in July’s Scientific American by Gershon Dublon and Joseph Paradiso of the MIT Media Lab, called “Extra Sensory Perception,” and I recommend it highly to you, as it gives us a glimpse of where we are going.

The computer and the crude network of computers that we call the internet have profoundly altered our lives. Our children interact with a cadre of new devices with ease. And I would argue that, as a result of when they were exposed to these devices, they are programmed differently than we of an older generation are. Yet we are all still pounding away at a keyboard, a single node in cyberspace. Our children just pound more efficiently. You’ve all heard about the new Google Glass. But just as with our computer monitors, whatever information we receive from these devices is reduced by our computer processor to some understandable two-dimensional form, or, as we have seen, maybe five-dimensional. As I sit here and pound away, robotic eyes of the Mars Rover are beaming back data to me. Well, not really to me directly, because I do not have the right interface to receive these particular signals. But at the same time I am surrounded by other signals. I am bathed in them, in fact. Radio transmissions, television transmissions, cell phone signals, motion detectors from my alarm system, WiFi signals from my computer and back from my internet service provider, GPS signals, etc., etc., etc. It’s almost frightening. Yet for every one of these signals, we need a dedicated device to interpret it. What if we could assimilate all of this information directly? What if we could, in essence, see it?

Such a direct assimilation would profoundly alter our definition of the phrase and concept of “being there.” How was it possible that we watched Neil Armstrong step onto the lunar surface in July of 1969? I remember thinking at the time how strange it was. It was as if I were actually there ahead of him. Today we watch live pictures stream back from Mars and from the giant planets. I had the same bizarre disconnect when I watched the very first images come back from the planet Jupiter. My original understanding of being there was changed forever. It became something so much more profound. In contrast to what is, what will be is astounding, not even truly understandable yet. We cannot yet really make sense of the ways in which we will assimilate all of this information into a greater extrasensory perception.

The photograph, in its broadest sense, defines what we mean by being there. First, we had still black and white photographs that capture an instant in time forever. Then we made pictures 3D. Then we added color. Then we added time. Then we added sound. You can visualize a little dimension counter adding new dimensions with each of the last five sentences. We assimilated new information at each step and added new dimensions of perception. So when we ask what perception will mean in the hyperspace of the future, we have to ask at the same time what a photograph will be with these new dimensions added to it.

I like to think of the man sitting across the dinner table from a young woman with whom he is enamoured. He struggles with the question of whether to say “I love you.” It poses such a risk, such an act of no return. It occurs to him that perhaps he should say “Je t’aime” instead. As an English speaker he believes that there might be less risk in saying it in French. His five senses limit him. With his eyes he looks across the table and studies the moistness and sparkle in her eyes, the flush in her cheeks (maybe just the wine), and the way that she looks back at him. With his nose he sniffs for ambiguous pheromones hidden amidst the sweet smell of her perfume. He listens for cues, a subtle change in her voice perhaps, or a quickening in her breathing. He reaches across the table to touch her hand. Does he feel the hairs rise? He is not sure. Whatever the future holds, the magic will still be there, and someone, he or she, must always take the risk and leap across the emotional abyss.