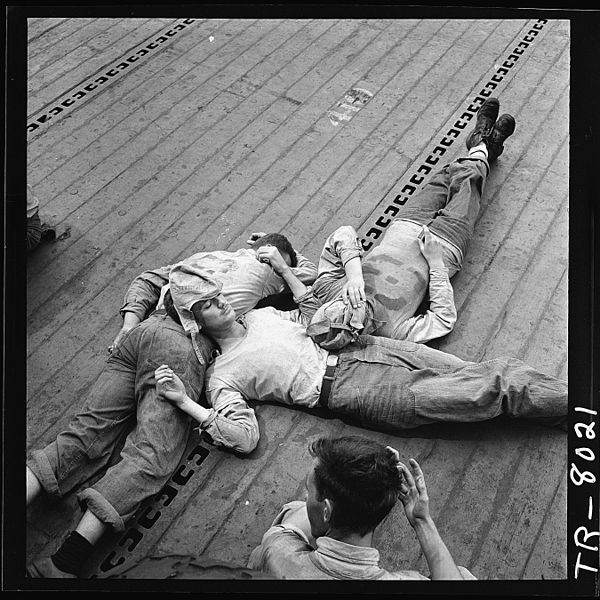

Figure 1 – Edward Steichen, “Sailors Sleeping on the Deck of the USS Lexington,” 1943, from the US NARA and in the public domain.

Today is Saint Crispin’s Day. On this date 598 years ago, October 25, 1415, one of the great epic battles of the Middle Ages took place: the Battle of Agincourt. The battle is highlighted in Shakespeare’s history “Henry V.” I point this out because in one of the great soliloquies of that drama, Henry bemoans the fact that slaves may sleep soundly while kings lie awake through the night.

Not all these, laid in bed majestical,

Can sleep so soundly as the wretched slave,

Who with a body fill’d and vacant mind

Gets him to rest, cramm’d with distressful bread;

Never sees horrid night, the child of hell,

But, like a lackey, from the rise to set

Sweats in the eye of Phoebus and all night

Sleeps in Elysium…

You may ask what my point is. Well, reader Megan has shared with me a wonderful portfolio of images by Vienna-based photographer Paul Schneggenburger: night-long exposures of couples sleeping. They seem more like intricate dances, dances of sleep. Each couple sleeps in Schneggenburger’s studio apartment, on black sheets. The room is lit with candles, and the camera hangs over the bed and takes a six-hour exposure. There is something sweet and wonderful here, with maybe an ounce of voyeurism, perhaps reminiscent of Andy Warhol’s five-hour-and-twenty-minute film “Sleep,” which shows his friend John Giorno sleeping. But in the end, I think what strikes one most is the sense of joyous peacefulness. Henry V was right. There are no kings here.