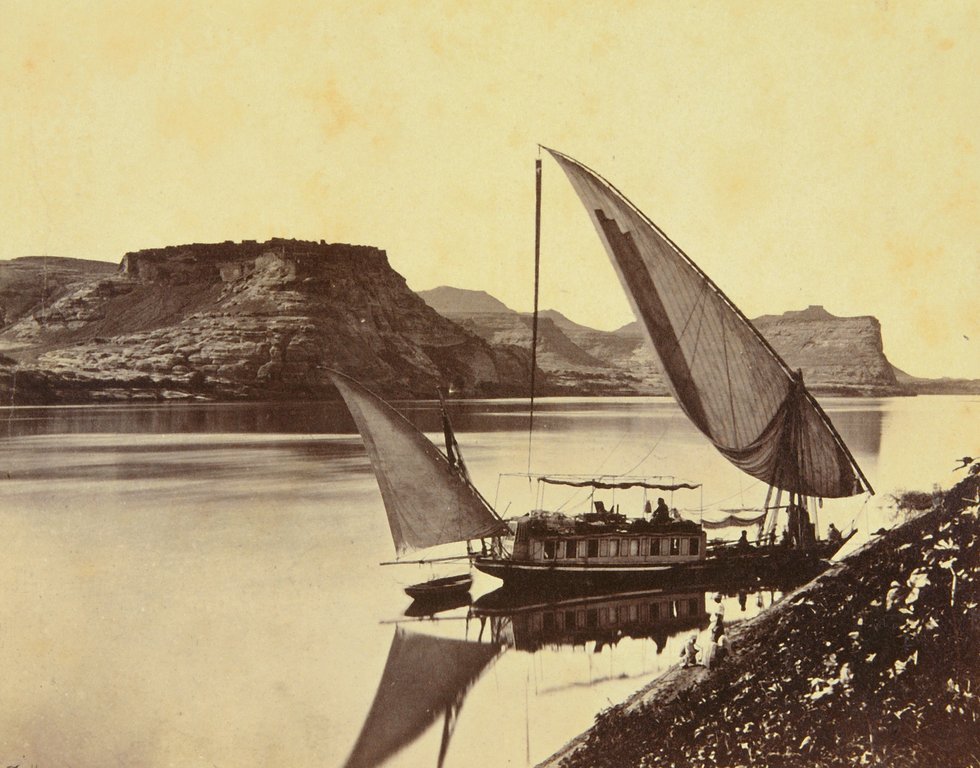

Figure 1 – Discarded antique belt wheels at the historic Damon Mill in Concord, MA. (c) DE Wolf 2019.

This past week, I made two photographic transitions.

First, I upgraded my failing iPhone 6 to an iPhone XS Max. The 6 represented a major advance in cell phone camera technology, and the 7 even more so. With the XS Max comes the best camera until, I suppose, the XI. There are three cameras on the iPhone XS Max, two on the back and one on the front for selfies and facial recognition. Significantly for those of us who do a lot of post-processing, the dual rear cameras are each 12 megapixels, which some will recall was the transition point when digital started to be equivalent to film in resolution. The lenses are f/1.8 for the wide-angle camera and f/2.4 for the 2x “telephoto.” Let’s put the word telephoto in quotes; for photography buffs this is more of a “normal” lens. But I hasten to mention the fantastic capabilities of these cameras in terms of close-up and wide-angle ability. For me, there is no reason to carry any cameras other than my phone’s and my DSLR.

Of course, a lot of the value lies in the Artificial Intelligence, the AI, in the algorithms. Yes, that again, friends! This is not your father’s camera, or at least not my father’s. This is “computational photography,” and it has a new feature called “Smart HDR,” where the phone begins capturing images as soon as you open the app, not just when you push the shutter. Each image is really a stream of images, with one chosen as “best.” But, I hasten to add, you can change that later using the camera’s post-processing algorithms. By combining images, the camera optimizes lighting and in the process avoids overexposure and shutter lag. While the images produced are only 8 bits per color plane, in my experience so far the histograms are spot on, filling the dynamic range perfectly. Well, enough said for now. I am having fun and getting fabulous shots.
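For the curious, here is a minimal sketch of the general idea of combining several frames into one well-lit image. It is emphatically not Apple's Smart HDR, whose details are not public to me. It is a toy version of a well-known technique called exposure fusion, in which each pixel is a weighted average across frames and pixels near mid-gray count the most. The function names, the `sigma` parameter, and the tiny grayscale "frames" are all my own assumptions for illustration.

```python
import math

def well_exposedness(value, sigma=0.2):
    """Weight for a pixel value in [0, 1]; largest at mid-gray (0.5).

    The Gaussian shape and sigma are illustrative assumptions.
    """
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))

def fuse_frames(frames, sigma=0.2):
    """Blend equally sized grayscale frames (rows of floats in [0, 1]).

    Each output pixel is the weighted mean of that pixel across frames,
    so blown-out or very dark samples contribute little.
    """
    height, width = len(frames[0]), len(frames[0][0])
    fused = []
    for y in range(height):
        row = []
        for x in range(width):
            values = [frame[y][x] for frame in frames]
            weights = [well_exposedness(v, sigma) for v in values]
            total = sum(weights)  # always > 0 because exp() is positive
            row.append(sum(w * v for w, v in zip(weights, values)) / total)
        fused.append(row)
    return fused

def to_8bit(image):
    """Quantize floats in [0, 1] to 8-bit integers (0..255)."""
    return [[min(255, max(0, round(v * 255))) for v in row] for row in image]

def histogram_span(image_8bit):
    """Return (darkest, brightest) level used, a crude 'fills the range' check."""
    flat = [v for row in image_8bit for v in row]
    return min(flat), max(flat)

# Hypothetical two-frame burst: one underexposed, one overexposed.
under = [[0.0, 0.1], [0.2, 0.5]]
over = [[0.5, 0.9], [1.0, 1.0]]
fused = fuse_frames([under, over])
print(histogram_span(to_8bit(fused)))
```

A span close to (0, 255) is what I mean by a histogram that fills the dynamic range, although a real histogram tells you far more than its two endpoints.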

And as an example of image quality, I am including as Figure 1 a sepia-toned black-and-white image of discarded antique belt wheels at the historic Damon Mill in Concord, MA, taken with my iPhone XS Max and very minimally modified in Adobe Photoshop. I think the subject matter fitting. In their day, in the mid to late nineteenth century, these waterworks, which powered the clothing industry of the Industrial Revolution without electricity, were the height of technology, just as these new cell phone cameras are today. They are now discarded ornaments, which truly makes one wonder what is next!

Second, at the urging of a wise friend, I have started playing with the app PRISMA. This stylizes your images in various painterly fashions. According to VentureBeat, “PRISMA’s filter algorithms use a combination of convolutional neural networks and artificial intelligence, and it doesn’t simply apply a filter but actually scans the data in order to apply a style to a photo in a way that both works and impresses.” If you’re like me, this tells you NOTHING. But the point is that these are not simple filters but AI neural networks applied to image modification. They are a lot of fun to use, and when you have a photo that lacks a certain oomph, you can often “jazz” it up with the PRISMA app. It is important, I believe, to remember that the goal here is to achieve a beautiful and artistically pleasing image. Artistic photography is intrinsically nonlinear. Strict intensity and even spatial relationships are fundamentally lost in the processing. So there is nothing wrong with using modern image processing techniques to enhance the effects.
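To make the neural network idea a little less mysterious, here is a small sketch of one ingredient from the published neural style transfer literature: the Gram matrix, which summarizes "style" as correlations between feature channels while discarding where in the image those features occur. I do not know whether PRISMA works this way, so treat this purely as an assumption-laden illustration. The function names and the tiny made-up "feature maps" are hypothetical, and a real system would take its features from a trained convolutional network.

```python
def gram_matrix(feature_maps):
    """Channel-by-channel correlations of feature activations.

    feature_maps: list of channels, each a flat list of activations
    (one number per image position). Positions are averaged away.
    """
    n = len(feature_maps[0])
    return [
        [sum(a * b for a, b in zip(ci, cj)) / n for cj in feature_maps]
        for ci in feature_maps
    ]

def style_distance(features_a, features_b):
    """Sum of squared differences between two Gram matrices."""
    gram_a, gram_b = gram_matrix(features_a), gram_matrix(features_b)
    return sum(
        (x - y) ** 2
        for row_a, row_b in zip(gram_a, gram_b)
        for x, y in zip(row_a, row_b)
    )

# Made-up activations: 2 channels over 4 image positions.
features = [[1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, 1.0]]
# The same activations with the positions reversed in every channel.
rearranged = [[4.0, 3.0, 2.0, 1.0], [1.0, 0.0, 1.0, 0.0]]

print(style_distance(features, rearranged))  # 0.0
```

The zero distance is the interesting part: rearranging the positions leaves this measure of style unchanged, which is one concrete sense in which strict spatial relationships can be lost in the processing.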

More importantly, both the iPhone camera transition and the transition to PRISMA and related apps truly represent a new world for the photograph, one where, along with the photographer’s brain, the camera itself has a brain that works in tandem with you. Of course, the beginnings of all of this rest historically with the development of autofocus and autoexposure back in the seventies. But really, it is a new world energized by neural networks and artificial intelligence. You may have wondered how I can write a blog about photography and futurism in the same breath. Now you know!

As an illustration of this, Figure 2 shows the Old Salem District Courthouse in the Federal Street District of Salem, Massachusetts, reflected in the window of a condominium. The scene struck me as ever so Gothic. I wasn’t quite satisfied with the original image. However, I was able to accentuate this feeling of medieval Gothic, as well as to brighten up the tonality, with the PRISMA Gothic filter.

Figure 2 – American Gothic, reflections of the Salem District Courthouse in a condominium window, modified using the PRISMA Gothic filter, Salem, Massachusetts. (c) DE Wolf 2019.